In this experiment, I will instead choose to use the previous k words as my current state, and model the probabilities of the next token.

I’ve already gone in-depth on this for my LSTM for Text Generation article, to mixed results. We could do a character-based model for text generation, where we define our state as the last n characters we’ve seen, and try to predict the next one.

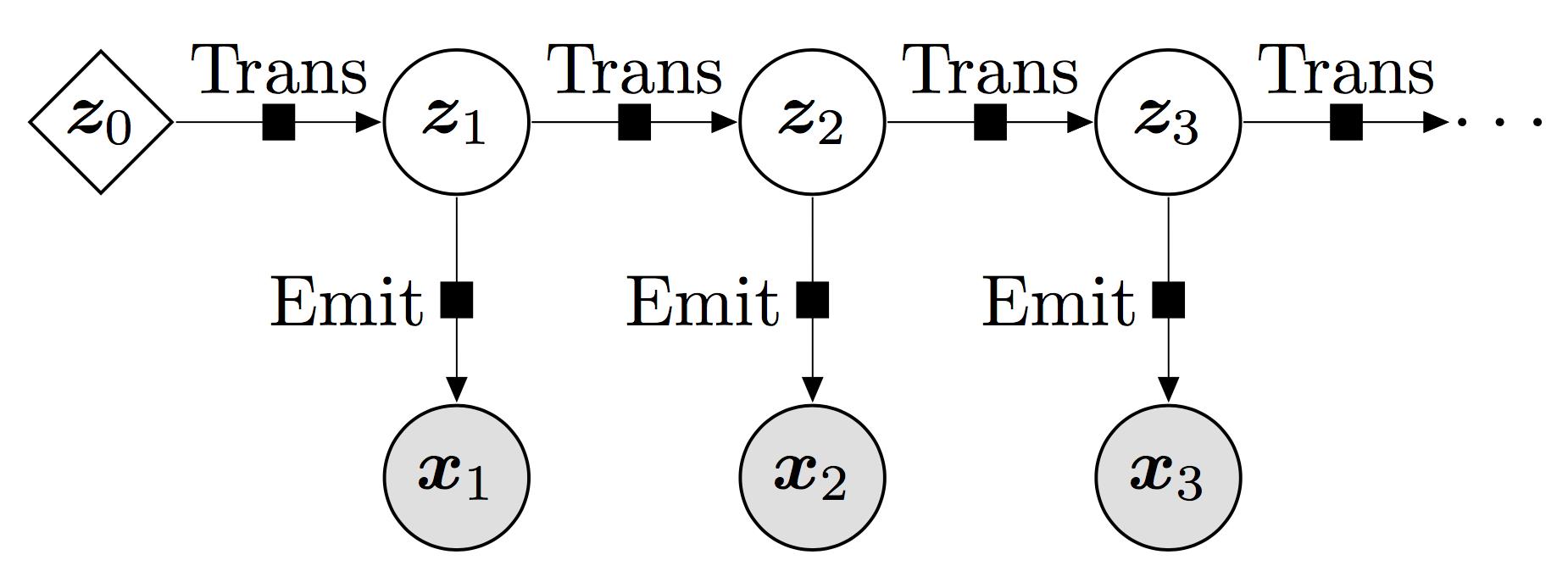

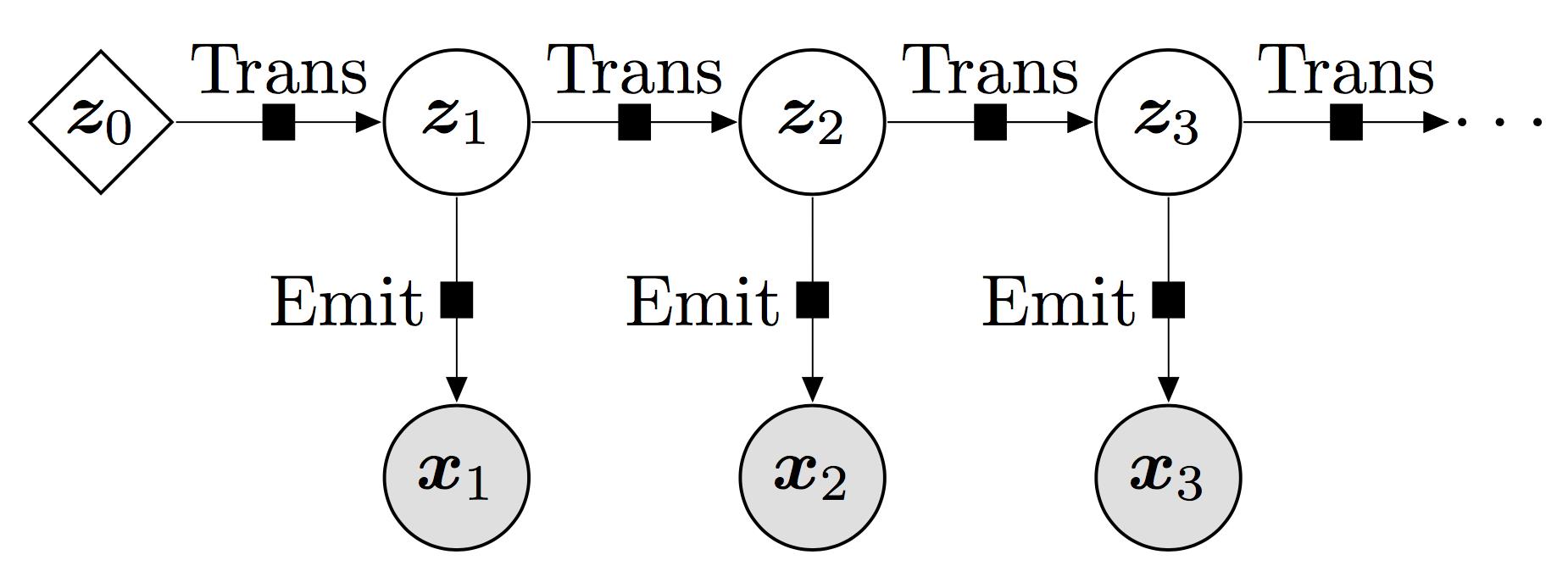

What probabilities will we assign to jumping from each state to a different one?. In order to generate text with Markov Chains, we need to define a few things: Then, if we represent the current state as a one-hot encoding, we can obtain the conditional probabilities for the next state’s values by taking the current state, and looking at its corresponding row.Īfter that, if we repeatedly sample the discrete distribution described by the n-th state’s row, we may model a succession of states of arbitrary length. We can express the probability of going from state a to state b as a matrix component, where the whole matrix characterizes our Markov chain process, corresponding to the digraph’s adjacency matrix. Here’s an example, modelling the weather as a Markov Chain. To show what a Markov Chain looks like, we can use a digraph, where each node is a state (with a label or associated data), and the weight of the edge that goes from node a to node b is the probability of jumping from state a to state b. What this means is, we will have an “agent” that randomly jumps around different states, with a certain probability of going from each state to another one. A Markov Chain is a stochastic process that models a finite set of states, with fixed conditional probabilities of jumping from a given state to another.
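To make the matrix view concrete, here is a minimal plain-Python sketch of a weather chain. The three states and their probabilities are made up for illustration (they are not read off the figures), and the helpers `one_hot`, `next_state_distribution` and `simulate` are hypothetical names. It shows that multiplying a one-hot row vector by the transition matrix just selects that state's row, and that repeatedly sampling from the selected row yields a sequence of states of any length.

```python
import random

# Hypothetical weather chain: states and probabilities are invented for
# illustration, not taken from the article's figures.
STATES = ["sunny", "cloudy", "rainy"]

# TRANSITIONS[a][b] = P(next state is b | current state is a); each row sums to 1.
TRANSITIONS = [
    [0.7, 0.2, 0.1],  # from sunny
    [0.3, 0.4, 0.3],  # from cloudy
    [0.2, 0.4, 0.4],  # from rainy
]


def one_hot(index, size):
    """Row vector with a 1.0 at `index` and 0.0 everywhere else."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def next_state_distribution(state_vec, matrix):
    """Row vector times matrix; with a one-hot input this picks out one row."""
    return [
        sum(state_vec[i] * matrix[i][j] for i in range(len(matrix)))
        for j in range(len(matrix[0]))
    ]


def simulate(start, steps, rng=random):
    """Sample `steps` transitions starting from the state named `start`."""
    current = STATES.index(start)
    path = [start]
    for _ in range(steps):
        probs = next_state_distribution(one_hot(current, len(STATES)), TRANSITIONS)
        # Draw the next state index according to the current row's probabilities.
        current = rng.choices(range(len(STATES)), weights=probs, k=1)[0]
        path.append(STATES[current])
    return path


if __name__ == "__main__":
    print(next_state_distribution(one_hot(1, 3), TRANSITIONS))  # → [0.3, 0.4, 0.3]
    print(simulate("sunny", 10, random.Random(0)))
```

The first call returns the "cloudy" row unchanged, which is the row lookup described above; `simulate` returns a path of `steps + 1` states, including the starting one.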

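For text, the same idea can be sketched with the previous k words as the state. This is a minimal sketch under my own assumptions, not the code used in the experiment: the corpus is a toy string, words are split on whitespace, and `build_model`, `next_word_probabilities` and `generate` are hypothetical names. Instead of a dense matrix, it stores, for each k-word state, a `Counter` of the words that followed it. That is equivalent to keeping only the non-zero entries of each row, which matters because most k-word states are followed by only a handful of distinct words.

```python
import random
from collections import Counter, defaultdict


def build_model(text, k=2):
    """Map each tuple of k consecutive words to counts of the word that follows it."""
    words = text.split()
    model = defaultdict(Counter)
    for i in range(len(words) - k):
        state = tuple(words[i:i + k])
        model[state][words[i + k]] += 1
    return model


def next_word_probabilities(model, state):
    """Normalise the successor counts of `state` into a probability distribution."""
    counts = model.get(state, Counter())  # .get avoids inserting unseen states
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


def generate(model, seed, length, rng=random):
    """Extend the k-word `seed` by up to `length` sampled words."""
    k = len(seed)
    out = list(seed)
    for _ in range(length):
        counts = model.get(tuple(out[-k:]))
        if not counts:  # dead end: this state was never followed by a word
            break
        words = list(counts)
        # Sample the next word with probability proportional to its count.
        out.append(rng.choices(words, weights=[counts[w] for w in words], k=1)[0])
    return " ".join(out)


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat ran to the door"
    model = build_model(corpus, k=2)
    print(next_word_probabilities(model, ("the", "cat")))  # {'sat': 0.5, 'ran': 0.5}
    print(generate(model, ("the", "cat"), 8, random.Random(42)))
```

With this toy corpus and k = 2, the state `("the", "cat")` was followed once by "sat" and once by "ran", so each gets probability 0.5. Generation stops early if it reaches a state that never appeared with a successor, such as `("the", "door")` at the end of the corpus.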